5 Common A/B Testing Mistakes Every Shopify Store Owner Needs to Know

Casey Brown | May 12, 2020

As ecommerce business owners, we are constantly looking for ways to maximize our store’s revenue. In an effort to “squeeze more juice” out of our websites, we are repeatedly making little tweaks to them: changing a headline here, swapping out some images there, and so on. But are these little tweaks hurting or helping the performance of our ecommerce businesses?

Well, unless you’re measuring the data via A/B testing, it’s impossible to know.

One of the most valuable tools in any revenue optimizer’s tool kit is proper execution of A/B testing. Yet everywhere I look, I see mistakes—huge mistakes that, in fact, make the data completely worthless.

Quick definition: An A/B test is when you produce two or more variations of a website, split the website traffic among those variations equally, and choose a winner based on which one produces the most net profit.
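To make the "split the website traffic equally" part concrete, here is a minimal sketch of how a visitor could be assigned to a variation. Tools like Convert handle this for you; the function name, the visitor IDs, and the test name below are made up for illustration and are not taken from any particular tool.

```python
import hashlib


def assign_variation(visitor_id: str, test_name: str, variations: list[str]) -> str:
    """Map a visitor to one variation, with each variation equally likely."""
    # Hashing the test name together with the visitor ID means the same
    # visitor always lands in the same variation for a given test.
    digest = hashlib.sha256(f"{test_name}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variations)
    return variations[bucket]


# Hypothetical traffic: 10,000 visitors split between two variations.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variation(f"visitor-{i}", "headline-test", ["A", "B"])] += 1

print(counts)  # roughly half of the visitors end up in each variation
```

Because the assignment depends only on the visitor ID and the test name, a returning visitor keeps seeing the same version of the page, which keeps the comparison clean.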

People seem to have a fundamental misunderstanding of several principles of A/B testing. I’ll often see peers of mine touting their latest wins on social media based on a test they ran on their websites literally a day or two before.

“Interesting results,” I’ll say to them, “Are you sure the data you’re posting is accurate?”

“Yes,” they’ll say, “because after I changed the headline, I saw an increase in conversion rate the next day. So I know it worked.”

Usually, I’ll just drop it right there.

But if I know them a little better and feel they are mature enough to take the criticism, I’ll say something like, “Well, have you ever had a particular day where your Facebook ads just seemed to perform extra well for God knows what reason? Ever noticed that weekends typically do better than weekdays?

“Anything can affect conversion rate on a day-to-day basis. Heck, even the rain can affect it. Comparing today’s conversion rate to yesterday’s doesn’t mean much. Date-range comparisons are extremely unreliable.”

Then, I usually explain to them the concept of statistical significance and how their results aren’t actually accurate because the sample size is too small.

This is by far the #1 mistake I see Shopify store owners make in regard to split testing (aka A/B testing).

Keep reading, and I’ll tell you four more of the biggest mistakes I see and how to fix them.

How to Get Clear, Unbiased Answers: A/B Testing

As I said earlier, the only way to get reliable answers about whether the changes you’re making to your website are helping or hurting is to test those changes. So, for those of us who haven’t done this before, how do we get started?

To get started with A/B testing, you need the following:

- A/B testing software

- Basic knowledge of how to run an A/B test

First, I’ll talk about the software, because that’s the easiest part.

I like two tools:

- Paid: Convert

- Free: Google Optimize

If you’ve got the budget, I highly recommend jumping right in with Convert. They offer a 30-day free trial, they have excellent support, and the tool just works amazingly well.

Google Optimize is free, but it has absolutely zero support (it’s a free tool after all). However, it does do the job just fine for beginners. You’ll definitely want to hire someone to help you install it on your Shopify store; it’s a surprisingly huge pain in the rear.

There are plenty of others out there, such as VWO and Optimizely; however, I didn’t have a positive experience with either of these. They pale in comparison to Convert, in my opinion.

Whichever tool you go with is fine by me, but I love Convert.

Now, on to the fun stuff: The following list of “Don’ts” is a quick guide to help you shave months off your A/B-testing learning curve. A whole book could be written on the subject, but for now, this will serve as an easy reference guide for the basics. Follow these principles below, and you’ll have mastered the 80/20 of A/B testing!

The 5 “Don’ts” of A/B Testing

- Don’t use a small sample size. This is by far the most common mistake I see. Nothing makes me cringe more than seeing a colleague post on their Facebook page, “Hey guys, I just tested xyz, and look at the results—30% increase in conversion rate!!”

Right off the bat, know that a 30% increase in conversion rate off a single test is a red flag. If the store in question has never been optimized before, then sure, it’s possible to get a 30% win off a single test. But for any store that isn’t a complete mess, this percentage increase is highly unlikely.

Digging deeper, I click the screenshot of the data he posted, and bingo!—I’ve found the problem: small sample size. He’s run 300 visitors to each variation at a 4% conversion rate, and he’s drawing his conclusion on this. This is not nearly enough traffic to conclude there’s a winner—in fact, about 20 to 30 times that amount of traffic is needed.
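To see how little 300 visitors per variation can tell you, here is a rough sketch of a standard two-proportion z-test. The order counts (12 versus 16) are hypothetical numbers chosen to match a 4% baseline and a lift of about 33%; they are not taken from the screenshot in question, and a testing tool may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a pooled two-proportion z-test."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled conversion rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical data: 300 visitors per variation, 12 orders vs. 16 orders.
p_value = two_proportion_p_value(12, 300, 16, 300)
print(f"p-value: {p_value:.2f}")  # far above the conventional 0.05 cutoff
```

A p-value this high means a gap of that size shows up often by pure chance, even when the two versions perform identically.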

What he’s basically done is flipped a coin 10 times, gotten heads 7 of those 10 times, and concluded, “Heads wins 70% of the time. See, I tested it!” You can see the problem in the logic here.
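The coin-flip comparison is easy to check with a few lines of arithmetic. This sketch computes how often a perfectly fair coin gives 7 or more heads in 10 flips; the function name is just for illustration.

```python
from math import comb


def prob_at_least(heads: int, flips: int, p: float = 0.5) -> float:
    """Probability of getting at least `heads` heads in `flips` flips."""
    return sum(
        comb(flips, k) * p**k * (1 - p) ** (flips - k)
        for k in range(heads, flips + 1)
    )


print(f"{prob_at_least(7, 10):.3f}")  # 0.172
```

In other words, a fair coin produces a "70% heads" result or better about one time in six, so seeing it once proves nothing.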

Fortunately for all of us, there’s a foolproof way of making sure we don’t commit this egregious error: use a statistical significance calculator. There’s a ton out there if you google it, but I like Optimizely’s Statistical Significance Calculator (which is funny, because I don’t like their actual A/B testing software, haha).

The image above shows their calculator in action. I could write an entire article about how to use these types of calculators, but, for simplicity’s sake, I’ll just say this for now: only touch “Baseline Conversion Rate”—nothing else. Fill this in with the conversion rate of the page you are testing (not the entire store average!) and don’t worry about Minimum Detectable Effect or Statistical Significance. Leave those alone. Whatever the calculator tells you is the sample size you need, use that. It’s simple.

Note: If your store gets tons of traffic and has a high conversion rate, you can lower Minimum Detectable Effect from 20% to 10% to get a more accurate result, but for 99% of you reading this, this does not apply. This is only for stores doing tons of volume.
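If you are curious what a calculator like this does under the hood, here is a minimal sketch using the classical fixed-sample formula for comparing two conversion rates. This is an assumption for illustration: it is not necessarily the method Optimizely's calculator uses, so its numbers can differ from what you see there. The 95% significance and 80% power defaults are common conventions, not values from this article.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(
    baseline: float,
    relative_mde: float,
    alpha: float = 0.05,   # 95% significance, two-sided (assumed default)
    power: float = 0.80,   # 80% power (assumed default)
) -> int:
    """Visitors needed per variation to detect a relative lift of `relative_mde`."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# 4% baseline conversion rate with a 20% and a 10% Minimum Detectable Effect.
print(sample_size_per_variation(0.04, 0.20))
print(sample_size_per_variation(0.04, 0.10))
```

With a 4% baseline and a 20% Minimum Detectable Effect, this formula lands at roughly ten thousand visitors per variation. Dropping the Minimum Detectable Effect to 10% roughly quadruples that, which is why the lower setting only makes sense for stores with tons of volume.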

- Don’t test dumb stuff. The new, wide-eyed A/B tester can quickly get carried away with wanting to test every little thing. While I admire this spirit, there is a fine line between (1) wanting to be scientific and run a lot of tests and (2) disregarding common sense.

For example, testing any of the following would qualify as “testing dumb stuff”:

- Comparing an orange add-to-cart (ATC) button to a green ATC button

- Saying “American Owned” versus “USA Owned and Operated”

- Displaying the price in red font versus green font

- Testing prices of $19.99, $19.97, and $19.95

They say “fortune favors the bold,” and this is more true in the world of A/B testing than it is anywhere else. Moving a few pixels around here and there is not going to get you the “wins” you’re after.

Think about it: how could it?

You haven’t added any new features that improve persuasiveness, credibility, trust, or authority. You haven’t handled any new objections or explained the value of the product more clearly or anything like that.

Nobody ever went to a website and said, “I had some doubts and wasn’t going to buy initially … but then I realized the ATC button was orange instead of green and decided, ‘Oh man—I have to have this.’”

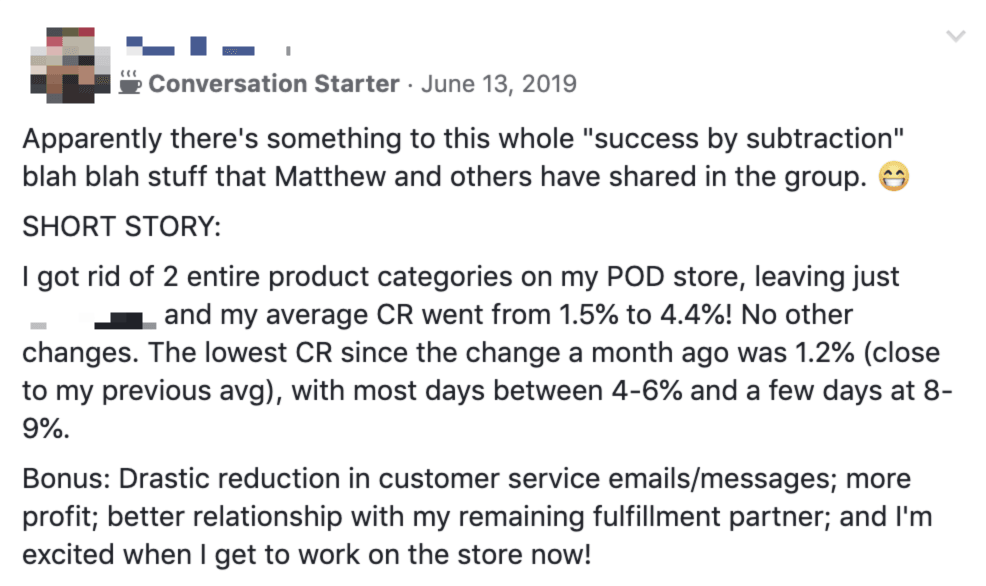

As you can see, “testing dumb stuff” (not testing boldly enough) resulted in absolutely zero change, even after 25,000 total visitors.

While I certainly admire the thorough attention to detail of wanting to test every little thing, the bottom line is that it’s a waste of time.

Here’s another example: Changing the headline from “World’s Best Dog Leash” to “Best Dog Leash in the World” isn’t going to move the needle. They’re too similar. You need to test bolder, more drastic changes if you want to see the needle move.

So make those big and bold changes, and test those. I am writing an article that will give you ideas and inspiration about exactly what to test, but for now, here are some pointers to get you started:

- Test headlines: Try testing a new and completely different value proposition in your headline, like “World’s most durable dog leash” versus “The only dog leash that trains your dog not to pull.”

- Test the offer: Test by offering one for $30 versus two for $50.

- Test the risk reversal: Try offering a full money-back guarantee versus just offering free size exchanges.

- Test your objection handlers: Deal with common objections in a new and different way. You can address doubts about product effectiveness by testing one version of the page that uses a lot of social proof (such as reviews) from customers versus a second version that features an endorsement by a popular niche celebrity.

- Test adding a charitable component: For example, you could try something like: “We donate 10% of profits to animal shelters.” Is the increase in conversion rate worth the extra 10% of profits you now need to give away? It’s important to know your numbers.

- Test the images: Test to compare before and after photos, lifestyle images, images that show the product in use, images that handle objections, images that demonstrate the features, and so on.

- Test the site navigation: Rather than having customers browse by interest (nursing, yoga, cooking), test by giving them a different type of navigation where they have to browse by product type first (shirt, mug, pillow).

- Test the price: Try $39.99 versus $49.99. (Note: price-split tests are extremely complex and not for newbies!)

Test big and bold. A lazy person’s testing strategy gets you a lazy person’s results. It’s a lot of work to code drastically different web pages and test them, but the results are well worth it.
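For the charitable-component idea in the list above, "knowing your numbers" comes down to a break-even calculation. Here is a small sketch, assuming profit per visitor is simply conversion rate times profit per order, and that the donation comes straight out of that profit. The 4% conversion rate and $20 profit per order are made-up example values.

```python
def profit_per_visitor(
    conversion_rate: float, profit_per_order: float, donation_share: float = 0.0
) -> float:
    """Profit kept per visitor after donating `donation_share` of profits."""
    return conversion_rate * profit_per_order * (1 - donation_share)


def break_even_lift(donation_share: float) -> float:
    """Relative conversion lift needed just to match the profit without a donation."""
    return 1 / (1 - donation_share) - 1


print(f"{break_even_lift(0.10):.1%}")  # 11.1%

# Hypothetical store: 4% conversion rate, $20 profit per order.
without_donation = profit_per_visitor(0.04, 20.0)
with_donation = profit_per_visitor(0.04 * 1.15, 20.0, donation_share=0.10)
print(with_donation > without_donation)  # True: a 15% lift clears the 11.1% bar
```

So giving away 10% of profits only pays off if the variation lifts conversion rate by more than about 11%.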

- Don’t run tests that are not based on a strong, data-driven hypothesis. Your criteria for running an A/B test should be that you can confidently complete the following sentence: “I believe that making this change will make the website more persuasive because _____.”

If you can’t fill in the blank with a strong, data-driven hypothesis, you’re not likely to get the result you want.

Here are some ways you could fill in the blank:

- … I noticed from survey data that customers are still unsure about xyz, and I think the extra clarity will help.

- … In Google Analytics, I noticed this page converts poorly due to xyz, and I think implementing xyz will fix this.

- … I was watching a website recording via Lucky Orange, and the users seemed to have trouble with xyz, and the redesign should solve many usability issues.

- … Our customer service agent told me that customers are confused by xyz, so I think the new photos showing the side-by-side comparison will address this.

- … Our competition is selling the product in xyz way, and they may know something that we don’t (that is, their way of selling may be more effective).

Notice that these examples are data driven and not opinions. They are all based on observational data of some kind, not a random idea that came to you. If the best you can come up with is, “I’ve got a hunch/feeling” … odds are, it’s going to be a loser.

- Don’t give up so easily if your test ends with a loss; use the concept of iterative testing. Building off of the previous point, we’re going to assume we have a strong data-driven hypothesis for a test. Let’s say our hypothesis is that people don’t trust our website and we’ve got survey data that confirms that the #1 thing that holds back our customers from purchasing is a lack of trust in the website.

Now, how many ways are there to remedy this objection? There is a concept within the world of A/B testing known as iterative testing. This means that even if the first solution we think of doesn’t win, we can iterate again and again until we find something that does work.

Getting back to this example: The issue is lack of trust. How many new web pages can we iterate that might remedy this? Here are a few:

- Find a niche celebrity to endorse the product.

- Add an “As Seen On” section for a list of news/media appearances in which the store has been featured.

- Add more social proof—reviews, video testimonials, and so on.

- Add more risk reversal—money-back guarantees and the like.

- Link to the product on Amazon so people can read reviews there, which are more trustworthy than reviews on your site. (That’s because the ones on your site can easily be faked.)

We could brainstorm hundreds of ways to improve the level of trust in our website, but this list will do for now.

In the example, let’s say we tried the first solution on that list: getting a niche celebrity to endorse the product. However, to our surprise, it resulted in a loss. “Celebrity influencers don’t work for this niche,” you might conclude. However, this would be a bit too hasty. Maybe it’s not that all celebrity influencers don’t work for your business; maybe it’s just the particular influencer you chose. The next one might be a home run. So let’s say that in this hypothetical example, you find a new celebrity influencer, and voilà! It is indeed the home run you’ve been looking for!

You’ve just learned a valuable lesson regarding iterative testing.

Given the caveat that you have a strong, data-driven hypothesis to begin with, if the first solution you tested didn’t result in a win, you can simply iterate new versions again and again and again.

There comes a logical point when you’ll want to throw in the towel, of course, but don’t give up too easily. Iterate a few different versions first and see if you can get a win on your second or third time around before giving up and trying something completely new.

Example: First Iteration (Loss) versus Second Iteration (Win)

- Don’t waste valuable traffic testing stuff that doesn’t need to be tested. The more statistically significant A/B tests you can run per calendar year, the better. And the main limiting factor to the number of tests you can run is simply website traffic. A/B tests require a lot of traffic, especially for ecommerce stores that generally have low conversion rates (the global industry average was about 2.9% in mid-2018).

So, unless you’re Amazon, you need to be selective about what you test.

In my two-plus years of running A/B tests like a madman, I’ve noticed something: any time you make a change to your website to add extra clarity or address a new objection, it’s pretty much guaranteed to win. There’s no need to spend valuable traffic testing these changes when that traffic could be reserved for running other tests.

Here are a few examples to illustrate my point:

- Customers are expressing concerns over getting the wrong size, so you add to the copy, “We now offer free size exchanges in case it doesn’t fit!” You are addressing an objection here, and by offering free exchanges, you are reducing the risk to the customer of buying. There is no need to test this. You already know that customers are going to respond well.

- Customers are asking via your support chat if the product is machine washable. It is, so just add this to the descriptive copy. There’s zero need to test it.

- Customers are confused about the size of the product, so you add an image of the product in someone’s hands for scale. You’re clarifying the size of the product and giving the customers what they were asking for (more clarity), so don’t bother testing it.

I think you get the point. There is a fine line between trying to be thorough and testing everything to a ridiculous degree. So remember, any time you address a new objection or add something clarifying, don’t bother testing it. Save that traffic for something else.

Conclusion

If you can avoid these five common A/B testing mistakes, you’re already 80% of the way toward true mastery. This is the foundation on which to build the rest of your knowledge.

- Run your A/B tests until they reach the sample size they need. Use a statistical significance calculator to find that number, and don’t stop the test until you’ve reached it.

- Don’t test moving a few pixels around. Be brave and test variations that are quite different from your original. Fortune favors the bold.

- Have a strong, data-driven hypothesis behind every test you run. Don’t test something because “you’ve got a good feeling about it.”

- Understand the concept of iterative testing. If you have a really strong hypothesis supporting your variation, but it loses in the test, iterate it a few more times in new ways before giving it up.

- Don’t test obvious stuff that doesn’t need to be tested. If you forgot to mention that your product is organic, just add that information to the description. Don’t waste time and traffic testing it. Of course “organic” is a good thing that people will respond well to. Save that traffic for testing something else that isn’t clearly a win.

The whole point of getting A/B testing right is to help your ecommerce store make more money. The next thing you should do is head over to our six-part revenue optimization mega series, where we break down the optimization of each page of your store in extreme detail.

Resources

Chou, W. (n.d.). Average conversion rates by industry, ad provider, and more. https://blog.alexa.com/average-conversion-rates/

Monetate. (2018). Monetate ecommerce quarterly report | Q2 2018 [PDF]. https://info.monetate.com/rs/092-TQN-434/images/EQ2_2018-The-Right-Recommendations.pdf

About the author

Casey Brown

Casey Brown has been with BGS since 2017. As a former Shopify store owner and full-time direct response marketer for many years, he understands the challenges experienced by “ecompreneurs”. Casey has earned the reputation for being a master A/B tester. Inspired by cold hard data, experience, and a little bit of gut instinct, it’s not uncommon for Casey to get clients huge wins quickly. According to Casey, waking up to this job and working with people you love is a luxury few are blessed with.